Minimax-Bayes Reinforcement Learning

Abstract

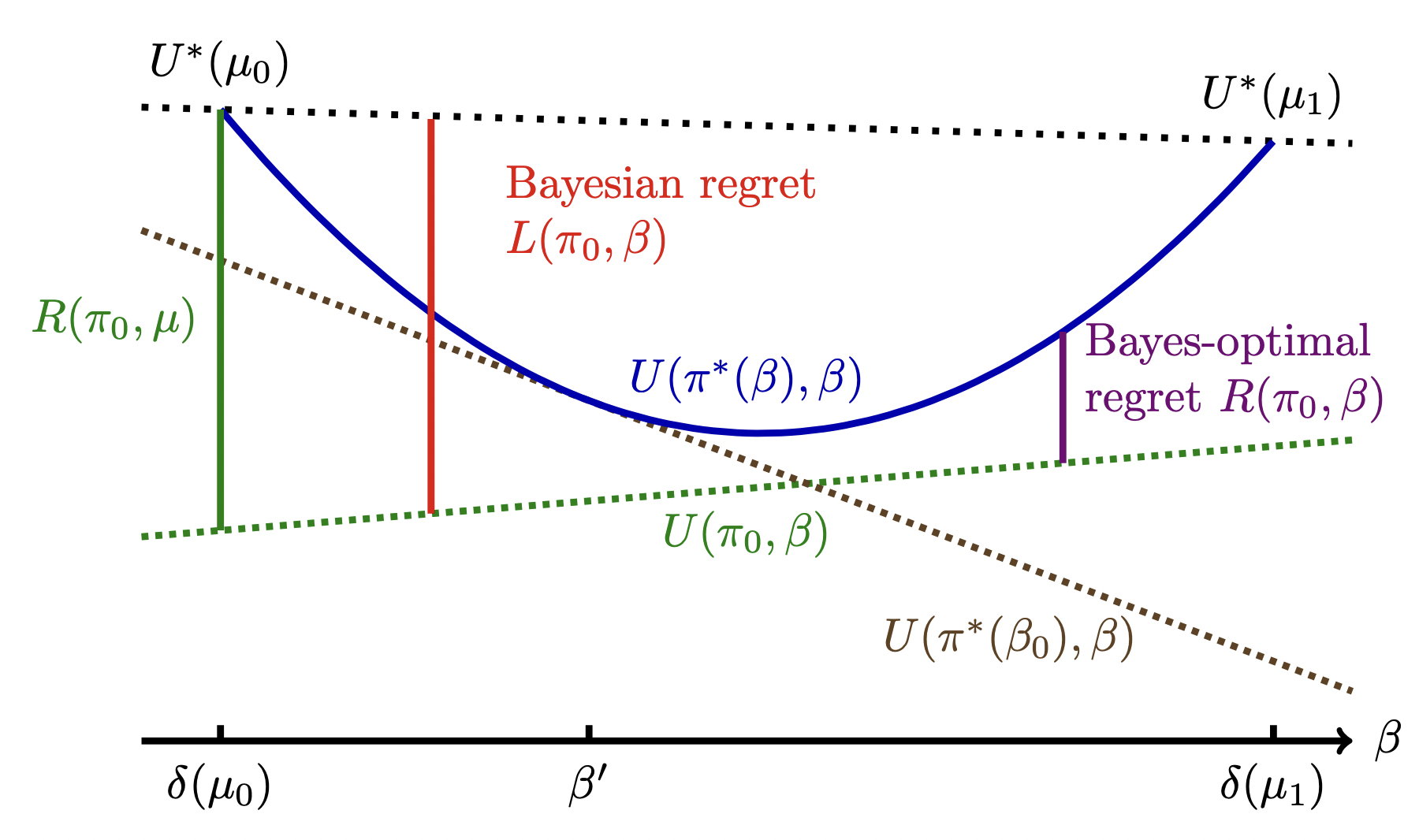

While the Bayesian decision-theoretic framework offers an elegant solution to the problem of decision making under uncertainty, an open question is how to select the prior distribution appropriately. One idea is to employ a worst-case prior. However, such a prior is not as easy to specify in sequential decision making as in simple statistical estimation problems. This paper studies (sometimes approximate) minimax-Bayes solutions for various reinforcement learning problems to gain insights into the properties of the corresponding priors and policies. We find that while the worst-case prior depends on the setting, the corresponding minimax policies are more robust than those that assume a standard (i.e., uniform) prior.
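To make the notion of a worst-case prior concrete, the following is a minimal sketch on a toy problem, not the method used in the paper. It assumes a hypothetical utility table `UTILITIES` with two policies and two environments, and finds by grid search the prior over environments that minimises the utility of the Bayes-optimal policy. All names and numbers here are illustrative assumptions.

```python
# Hypothetical utilities (an assumption for illustration):
# UTILITIES[i][j] is the expected utility of policy i in environment j.
UTILITIES = [[1.0, 0.0],
             [0.0, 0.5]]


def bayes_value(prior, utilities=UTILITIES):
    """Expected utility of the best deterministic policy under the given prior."""
    return max(sum(b * u for b, u in zip(prior, row)) for row in utilities)


def worst_case_prior(utilities=UTILITIES, grid_size=301):
    """Grid search over priors (p, 1 - p) for the one minimising the Bayes value."""
    best_p, best_value = None, float("inf")
    for k in range(grid_size):
        p = k / (grid_size - 1)  # prior probability of environment 0
        value = bayes_value([p, 1.0 - p], utilities)
        if value < best_value:
            best_p, best_value = p, value
    return best_p, best_value


p_star, v_star = worst_case_prior()
v_uniform = bayes_value([0.5, 0.5])
print(f"worst-case prior on environment 0: {p_star:.3f}, Bayes value: {v_star:.3f}")
print(f"uniform prior Bayes value: {v_uniform:.3f}")
```

In this toy table the worst-case prior is not uniform, and the Bayes value it yields is lower than under the uniform prior, so a uniform prior gives an optimistic picture of achievable utility. In sequential problems the policy space is far larger, so an exhaustive search like this one is generally not feasible.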

BibTeX

@inproceedings{buening2023minimax,
  title={Minimax-Bayes Reinforcement Learning},
  author={Buening, Thomas Kleine and Dimitrakakis, Christos and Eriksson, Hannes and Grover, Divya and Jorge, Emilio},
  booktitle={International Conference on Artificial Intelligence and Statistics},
  pages={7511--7527},
  year={2023},
  organization={PMLR}
}